Technology drives maximal value to the enterprise, not through wielding the power of specific programming languages, but by composing multiple component technologies into systems that model, automate and deliver complex business processes.

Luckily, there exists the amazing world of Docker, container tools and the ecosystem of images. Collectively, these significantly lower the technical barrier to experimenting with a wide variety of technologies, leading to faster cycles of learning and innovation.

In the container world, open-source communities and vendors provide endless purpose-built images with popular technologies installed and configured to run with minimal effort. Containers can be stitched together and run as Docker Compose services in short order.

Overview of the Demo Application

Here I present an example system that provides machine-learning-based text analytics: a user submits a web page URL through a browser and is presented with measures of sentiment (polarity and subjectivity) along with extracted noun phrases predicted to be semantically meaningful.

Sentiment and noun phrase extraction techniques are often used to classify and flag content for review, follow-up and marketing brand management by companies with significant free-text digital content such as product reviews. The system is built from multiple containerized technologies and is deployed to a Docker environment, where it runs as a set of Docker Compose services.

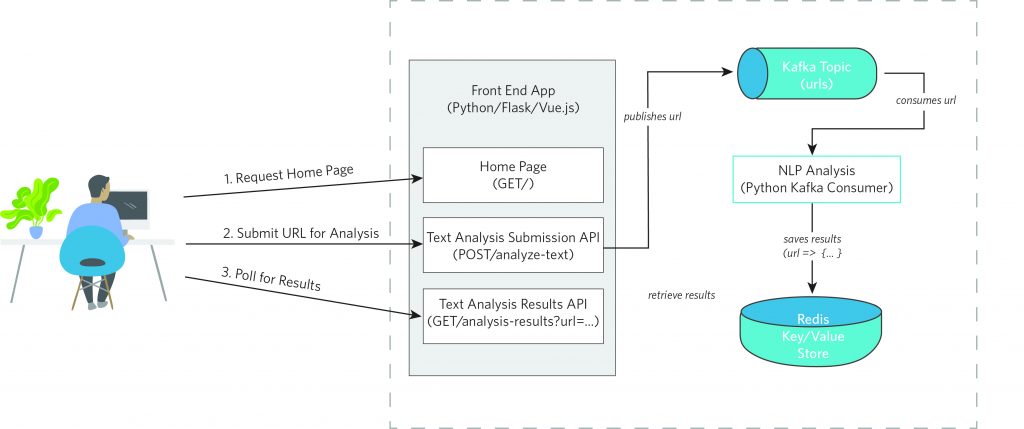

Below is the high-level architecture of the system. The source code can be found on GitHub.

The front-end application uses the Python-based Flask microframework along with Vue.js for an interactive UI. The URL is submitted via the front end and relayed to a Kafka topic. A decoupled, containerized Python consumer retrieves the URL from Kafka and performs text analytics using the TextBlob and Natural Language Toolkit (NLTK) libraries.

Once the analytics results are computed, they are saved to a containerized Redis key-value datastore. While this happens, JavaScript in the front end polls a REST API for results matching the original URL, then displays them in the browser.
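The produce, consume, store and poll flow described above can be sketched with the standard library alone. This is only an illustration under stated assumptions: a `queue.Queue` stands in for the Kafka topic, a plain dict stands in for Redis, and `analyze` is a hypothetical placeholder that returns dummy values rather than real text analytics. None of these names come from the project's source.

```python
import queue
import threading
import time

# In-memory stand-ins (assumptions): a Queue plays the Kafka topic and a
# dict plays the Redis key-value store.
url_topic = queue.Queue()
results_store = {}
store_lock = threading.Lock()


def analyze(url):
    """Hypothetical placeholder for the real text analytics step."""
    return {"url": url, "polarity": 0.0, "subjectivity": 0.0, "noun_phrases": []}


def consumer_loop():
    """Pull URLs off the topic, analyze them, and save results keyed by URL."""
    while True:
        url = url_topic.get()
        if url is None:  # sentinel value tells the consumer to stop
            break
        result = analyze(url)
        with store_lock:
            results_store[url] = result


def poll_for_result(url, timeout=2.0, interval=0.05):
    """Check the store repeatedly until a result appears or time runs out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        with store_lock:
            if url in results_store:
                return results_store[url]
        time.sleep(interval)
    return None


def run_demo(url):
    """Send one URL through the producer, consumer and poller."""
    worker = threading.Thread(target=consumer_loop, daemon=True)
    worker.start()
    url_topic.put(url)  # the "produce" step performed by the front end
    result = poll_for_result(url)
    url_topic.put(None)  # ask the consumer to shut down
    worker.join(timeout=1.0)
    return result
```

The point of the decoupling is that the producer never waits on the analysis: it only enqueues the URL, and the poller discovers the result whenever the consumer has written it.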

The Flask app source is contained in the nlpapp/app.py module, which consists of a handler function that serves the UI web page and two REST endpoints. One endpoint handles a POST request carrying the URL and produces it to the containers running Kafka; the other fetches the results of the analysis.
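To make the contract of the two endpoints concrete, here is a framework-independent sketch of their logic. It is not the code in nlpapp/app.py: the function names, the JSON shapes and the choice of status codes (202 for queued, 404 for not yet available) are assumptions made for illustration, and the `topic` and `store` arguments are simple stand-ins for the Kafka producer and the Redis client.

```python
import json
import queue
from urllib.parse import urlparse


def is_valid_url(url):
    """Accept only absolute http(s) URLs with a host part."""
    parts = urlparse(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def handle_submit(body, topic):
    """POST logic: validate the URL and enqueue it. Returns (status, json_body)."""
    try:
        payload = json.loads(body)
    except (TypeError, ValueError):
        return 400, json.dumps({"error": "invalid JSON"})
    url = payload.get("url") if isinstance(payload, dict) else None
    if not isinstance(url, str) or not is_valid_url(url):
        return 400, json.dumps({"error": "a valid http(s) url is required"})
    topic.put(url)  # stand-in for producing the URL to a Kafka topic
    return 202, json.dumps({"url": url, "status": "queued"})


def handle_results(url, store):
    """GET logic: return the stored analysis, or report that it is pending."""
    result = store.get(url)  # stand-in for a Redis lookup keyed by URL
    if result is None:
        return 404, json.dumps({"url": url, "status": "pending"})
    return 200, json.dumps(result)
```

In a Flask app, each function body would sit behind a route, with the request body and query parameters passed in by the framework.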

The next key part is the Kafka consumer within the nlpconsumer/webnlp.py module, which fetches the web page at the URL pulled from Kafka and uses the TextBlob library for text analysis as shown below. Specifically, the text analysis calculates the sentiment metrics of polarity and subjectivity. Polarity spans from -1 (indicating harshly negative content) to +1 (indicating a joyous, positive tone), with values near 0 being relatively neutral. The subjectivity score ranges from 0 (indicating fact-based content) to 1 (indicating opinion-based content).
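As a small aid to reading those two scores, the sketch below maps them to plain-language labels. The cut-off values (a neutral band of 0.1 around zero and a subjectivity midpoint of 0.5) are arbitrary assumptions chosen for illustration, not thresholds defined by TextBlob or by the demo application.

```python
def describe_polarity(polarity, neutral_band=0.1):
    """Label a polarity score in the range [-1, 1]."""
    if not -1.0 <= polarity <= 1.0:
        raise ValueError("polarity must be between -1 and 1")
    if polarity > neutral_band:
        return "positive"
    if polarity < -neutral_band:
        return "negative"
    return "neutral"


def describe_subjectivity(subjectivity, midpoint=0.5):
    """Label a subjectivity score in the range [0, 1]."""
    if not 0.0 <= subjectivity <= 1.0:
        raise ValueError("subjectivity must be between 0 and 1")
    return "opinion-based" if subjectivity >= midpoint else "fact-based"
```

With TextBlob, the inputs would typically come from `TextBlob(text).sentiment`, which exposes `polarity` and `subjectivity`, while `TextBlob(text).noun_phrases` supplies the extracted noun phrases.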

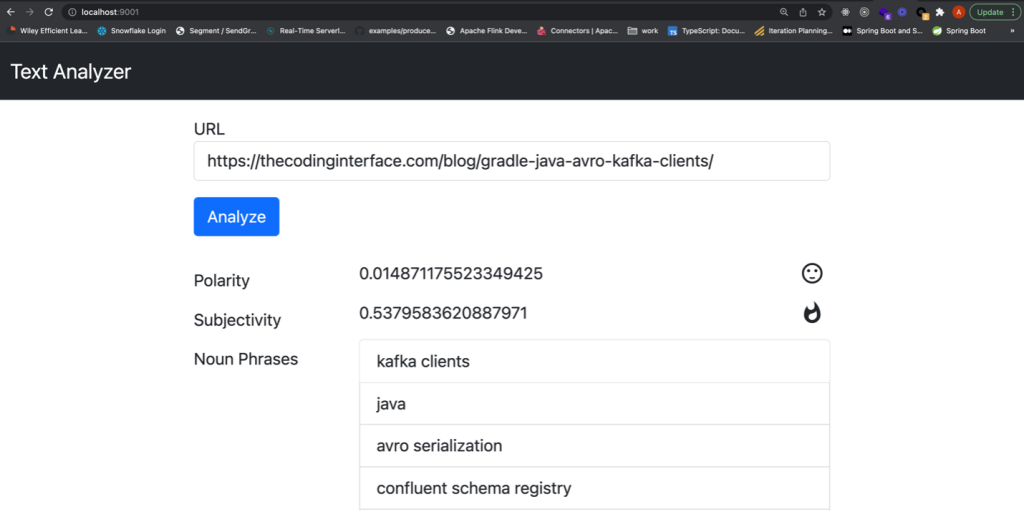

The last component worth discussing is the UI, which uses Vue.js for interactivity, HTML for structure and Bootstrap CSS for looks. Together these allow users to add the URL of a web resource to be analyzed. A screenshot of the UI can be seen below, and the code is primarily contained in a Jinja template.

Running on Docker

Now that the basic components have been discussed, I can demonstrate what is needed to stitch the pieces together in Docker containers and run them locally with Docker Compose.

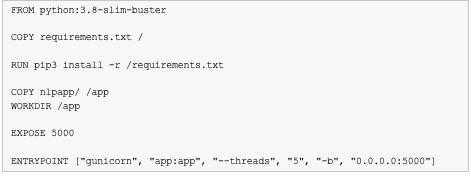

The front-end app is containerized per the instructions in the Dockerfile shown below. This packs up the previously presented Flask microframework web application along with the other required Python dependencies and launches it using the Gunicorn application server.

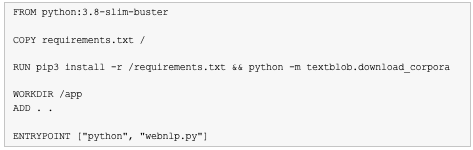

The Kafka consumer and text analytics program is similarly containerized per the following Dockerfile. Here the various Kafka and text analytics libraries are packaged into the container along with the Python consumer source code and launched as a standalone program.

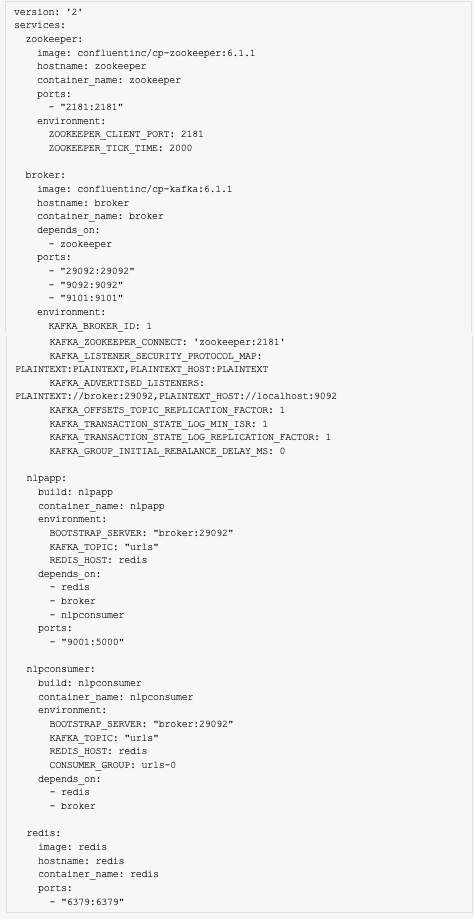

The remaining components are the Kafka message bus cluster and a Redis key-value store, which are normally fairly complex pieces of technology to install and configure. However, Confluent provides open-source, community-licensed images for both ZooKeeper and the Kafka broker. The Redis open-source project provides a similarly well-established image. All that remains is to assemble a docker-compose.yml file, which composes the various containers into a single deployable unit as shown below.

Execute the following command in the same directory as this project’s docker-compose.yml file.

With that single command, the Docker Compose services are launched. After a minute or two, I can point my browser to http://127.0.0.1:9001 and I’m presented with the demo app.
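Rather than guessing when the services have finished starting, the wait can be automated. The following is a minimal sketch, assuming the app answers a plain GET on its root URL with HTTP 200; the function name `wait_for_app` and its default timings are hypothetical and not part of the project.

```python
import http.client
import time
import urllib.error
import urllib.request


def wait_for_app(url, timeout=120.0, interval=2.0):
    """Return True once the URL answers with HTTP 200, or False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(url, timeout=interval) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, http.client.HTTPException, OSError):
            pass  # services are probably still starting; retry shortly
        time.sleep(interval)
    return False


if __name__ == "__main__":
    if wait_for_app("http://127.0.0.1:9001"):
        print("Demo app is up")
    else:
        print("Demo app did not respond in time")
```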

Pretty amazing, right?

Conclusion

In just a few hundred lines of code, plus a couple of Dockerfiles and some YAML, I was able to cobble together a reasonably complex proof-of-concept demo application. In doing so I got a feel for event-driven architecture, the Redis key-value datastore, Kafka-based pub/sub messaging, Python REST APIs and text analytics.

However, the learning doesn’t need to stop there. One could experiment with swapping out the Kafka message bus for something else, like Celery with RabbitMQ or even just Celery with Redis. Similarly, I could spin up a MongoDB or Cassandra database to take the place of the Redis key-value store, or swap the front end from a Python Flask implementation to Java Spring Boot.
